Github Link: https://github.com/desik1998/MathWithLLMs

Although LLMs are able to do a lot of tasks such as Coding, science etc, they often fail in doing Math tasks without a calculator (including the State of the Art Models).

Our intuition behind why models cannot do Math is because the instructions on the internet are something like a x b = c and do not follow the procedure which we humans follow when doing Math. For example when asked any human how to do 123 x 45, we follow the digit wise multiplication technique using carry, get results for each digit multiplication and then add the corresponding resulting numbers. But on the internet, we don’t show the procedure to do Math and instead just right the correct value. And now given LLMs are given a x b = c, they’ve to reverse engineer the algorithm for multiplication.

Most of the existing Literature gives instructions to the LLM instead of showing the procedure and we think this might not be the best approach to teach LLM.

What this project does?

This project aims to prove that LLMs can learn Math when trained on a step-by-step procedural way similar to how humans do it. It also breaks the notion that LLMs cannot do Math without using calculators. For now to illustrate this, this project showcases how LLMs can learn multiplication. The rationale behind taking multiplication is that GPT-4 cannot do multiplication for >3 digit numbers. We prove that LLMs can do Math when taught using a step-by-step procedure. For example, instead of teaching LLMs multiplication like 23 * 34 = 782, we teach it multiplication similar to how we do digit-wise multiplication, get values for each digit multiplication and further add the resulting numbers to get the final result.

Instruction Tuning: We’ve further done finetuning on OpenAI’s GPT-3.5 to teach Math.

There are close to 1300 multiplication instructions created for training and 200 for validation. The test cases were generated keeping in mind the OpenAI GPT-3.5 4096 token limit. A 5 x 5 digit multiplication can in general fit within 4096 limit but 6 x 6 cannot fit. But if one number is 6 digit, the other can be <= 4 digit and similarly if 1 number is 7 digit then the other can be <= 3 digit.

Also instead of giving * for multiplication and + for addition, different operators’ <<*>> and <<<+>>> are given. The rationale behind this is, using the existing * and + for multiplication and addition might tap on the existing weights of the neural network which doesn’t follow step-by-step instruction and directly give the result for multiplication in one single step.

Results

The benchmarking was done on 200 test cases where each test case has two random numbers generated. For the 200 samples which were tested, excluding for 3 cases, the rest of the cases the multiplication is correct. Which means this overall accuracy is 98.5%. (We’re also looking for feedback from community about how to test this better.)

Future Improvements

- Reach out to AI and open-source community to make this proposal better or identify any flaws.

- Do the same process of finetuning using open-source LLMs.

- Figure out what’s the smallest LLM that can do Math accurately when trained in a procedural manner (A 10 year kid can do Math). Check this for both normal models and distilled models as well.

Requesting for Feedback from AI Community!

I feel like LLMs should just do basic calculations themselves & everything else they should invoke a tool for verification

This reminds me of the scratchpad paper https://arxiv.org/abs/2112.00114.

I wonder if it is possible to use the same technique to make the model better at doing other tasks, like writing novels?

I’ll check this paper. Thanks for sharing btw!

what about the 2.5%?

Can you try one of Yi-34B’s variants?

Impressive work. It always bugged me when I had to use the Wolfram plugin to solve simple math problems. Given that GPT 3.5 is being used, is there any chance that GPT 4 would cover up the remaining 1.5% error?

maybe you need more instructions to hit higher accuracy

I don’t understand the motivation behind this.

Fine, you’ve ran an experiment out of curiosity and you got the result, but why would you want to finetune more language models on this?

It’s not like we need models that are almost as good at things computers are excellent at, while using orders of magnitude more resources.

It would be way more useful to train tiny models to predict when a calculator should be used.

It’s not like we need models that are almost as good at things computers are excellent at, while using orders of magnitude more resources.

one of the arguments for learning math (calculus, linear algebra, etc.) in school is because it supposedly helps you with critical thinking, logic reasoning, etc.

If this can be tested in LLMs then it gives weight to that proposal, because let’s face it, 99% of the population don’t use anything more complicated than exponential equations in their every day lives.

Calculus, linear algebra and mathematics in general is a good idea. Arithmetics is probably not. To me that’s like training LLMs to count up to high numbers correctly. I’m arguing that instead of reading a book on “the first 10^12 natural numbers” one should read a book on linear algebra.

I don’t understand the motivation behind this. It’s not like we need models that are almost as good at things computers are excellent at, while using orders of magnitude more resources. It would be way more useful to train tiny models to predict when a calculator should be used

I’m 100% on the fact that we should use calculators directly instead of LLMs for Math. I mean we humans also use calculators. But the thing is, many claim LLMs cannot do Math etc. This project is just to prove them wrong that LLMs when learnt the process especially in case of Math can do it. I mean if we consider the LLM to be the brain, it should ideally be able to master Math similar to how human brain does right

Fine, you’ve ran an experiment out of curiosity and you got the result, but why would you want to finetune more language models on this?

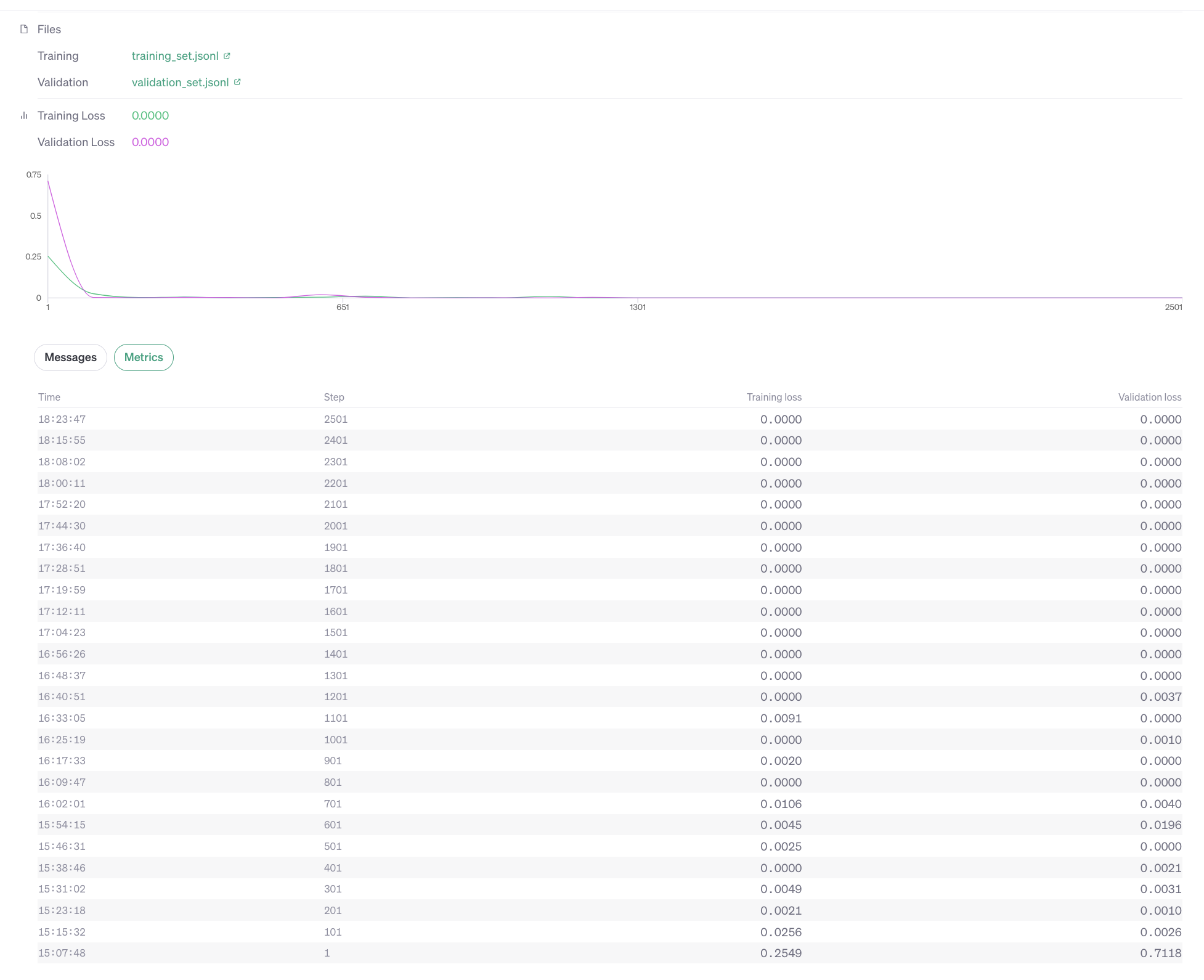

I mentioned this in Github Repo but missed out in the Reddit post. But here is the rationale: When initially tested using incontext learning for Gpt4, the model was able to follow the procedure showed in the 1shot prompting but it was sometimes failing with the overall result due to OpenAI’s addition technique. And given this is a procedural technique, doing a n-shot prompting would lead to even more tokens. Given these limitations, it was evident that finetuning would solve these issue. And also the model was able to quickly learn the procedure and the overall training and validation loss of model went close to 0 within 0.1 epochs

I mean if we consider the LLM to be the brain, it should ideally be able to master Math similar to how human brain does right

Except it’s not a brain. It’s, at best, a tiny portion of a brain’s processing, hence the move toward multi-modal models.

It’s a bit like trying to do poetry with your visual cortex, or math using your autonomic system. I mean, more strength to you, but while you can use a hammer on a screw, it doesn’t mean you should.

Yes agree Multimodal is the go to way but the current LLMs which are text based are also building a world view

I need this kind of data to fine tune my bot. But the most challenging part I’ve faced is division. Which unfortunately is not included in your project.

Yeah for division, I need to think from scratch but if possible, we can discuss how this whole thing can work for division

Some might find this recent paper interesting that took a similar approach for logic puzzles.

Forcing probabilistic models to engage in determinatic processes is perhaps the most fantastic form of thumb twiddling we have yet invented.

I did something similar a little while ago, but with a small 10M parameter model trained from scratch. I didn’t get around to doing multiplication, but I could reliably add and subtract numbers up to six digits. One thing I found was getting it to show working also improved its performance when not showing working.

the title is misleeading. it is not doing math but doing multiplication.

Agree